Research in the Archives: NERFC 2023-2024 Grant Applications Open

Northeastern University’s Archives and Special Collections is proud to be a member of the New England Regional Fellowship Consortium (NERFC), a collaboration of 31 cultural institutions across New England.

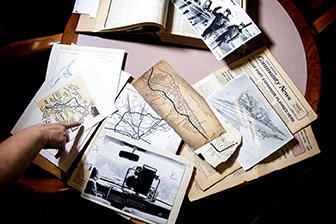

The fellowship program aims to promote research across a wide variety of institutions and regions in New England. NERFC grants at least two dozen awards every year. Fellows receive a stipend of $5,000 with the requirement that they conduct their research in at least three of the participating institutions for periods of two weeks each. The diverse group of institutions in NERFC offers research opportunities in collections that span the region’s history, from pre–European contact to the present day. Past awards have funded research on a wide array of topics conducted by scholars and independent researchers from across the US.

As one of the participating institutions, we encourage you to apply to make use of our records documenting Boston’s history of social justice activism, neighborhoods, and public infrastructure, as well as records from individuals and organizations that are part of the city’s African American, Asian American, LGBTQA, Latinx, and other communities. We also encourage you to make connections between our records and those of other NERFC institutions.

Past NERFC fellows’ projects using Northeastern’s archival collections examined feminist health care centers, gay art and photography in 1970s Boston, links between socialist and feminist thought in Boston, and the history of Black intellectuals, to name a few.

The Archives and Special Collections encourages researchers in the Northeastern community and beyond to apply to NERFC’s fellowship program by the February 1, 2023, deadline.

Have questions about how to get started? Email Reference and Outreach Archivist Molly Brown: mo.brown@northeastern.edu.

To learn more about the application requirements and other participating institutions, please visit the New England Regional Fellowship Consortium website.