Research Support Newsletter – Fall 2025

This post was originally sent as a newsletter to Research Support Staff at Northeastern University on September 3, 2025. If you would like to subscribe to receive future newsletters, please click here.

Did you know the library can help with…your grant proposal?

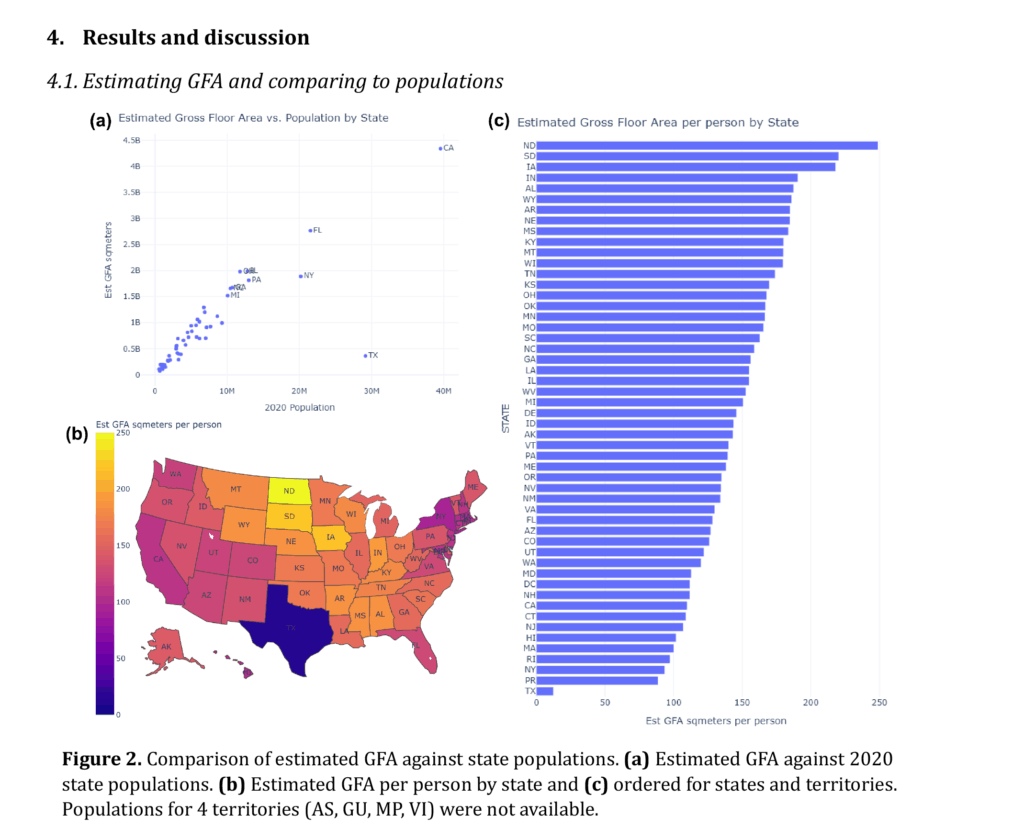

Join us for our Accelerate Your Proposal Development event! This program is a countdown of proposal-related questions the library can help with, including personalized support for crafting data management and sharing plans, improving your data visualizations and graphics, strategies for efficient literature reviews, and citation management. We’ll share information about the tools and people who can help you develop key proposal components and supplementary materials. Whether you’re in the early stages of developing your proposal or fine-tuning it before submission, we’re happy to work with you.

This virtual event takes place Wednesday, October 29, from noon to 1 p.m. Eastern time. Register here.

Did you know we have access to…tools and services to complete evidence syntheses?

This month, we are highlighting a few ways the library can support your evidence synthesis project. Evidence synthesis projects, which often do not require funding, can reveal important research gaps, thus strengthening future grant applications. If you are working on (or considering working on) a systematic review, scoping review, rapid review, or meta-analysis, read on!

Evidence Synthesis Service: Northeastern University Library provides a tiered set of support services for evidence synthesis projects such as systematic reviews, ranging from expert librarian guidance to full research partnerships. See our website and service tiers for more information.

Covidence: Covidence is a web-based evidence synthesis support tool that assists with screening references, extracting data, and keeping track of your work. Covidence requires registration with a Northeastern email address. If you already have an account, please sign in.

Start Smart — Foundations of Evidence Syntheses: Starting September 15, the library will be running a virtual workshop series for faculty and research staff on planning for and embarking on an evidence synthesis project.

Have any questions about completing evidence syntheses? Reach out to our expert, Philip Espinola Coombs.

We want to hear from you!

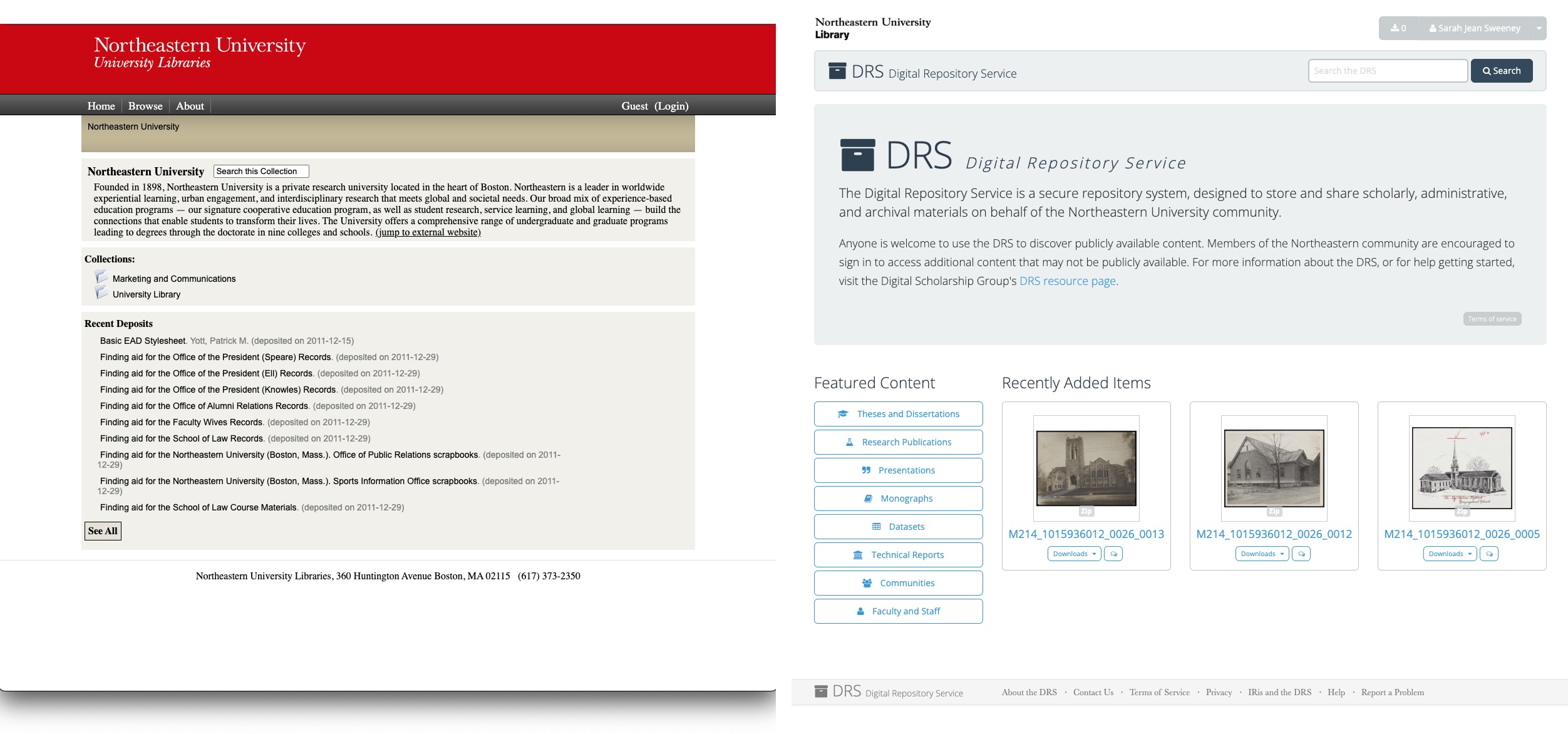

Research Data Storage Finder: We’re developing an interactive online tool to help researchers quickly narrow down the best platform for their data storage and archiving needs, and we’d love to hear what you think of what we’ve built so far. If you’d like to get a sneak peek and share your feedback, please let us know via this form.

That’s it!

Questions about the library? Email Alissa Link Cilfone, Head of STEM, or Jen Ferguson, Head of Research Data Services — we’d love to hear from you!